[ICACE2022] Automatic hand tracking system control with 4-axis light stand for surgical light

Copyright © The Korean Society of Marine Engineering

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/3.0), which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

Robots now assist in many areas of medicine. However, most surgical light stands are cumbersome because the position of the light must be adjusted manually. In this study, a surgical light-tracking algorithm that automatically directs the light by tracking the position of the doctor's operating hand in real time was developed and prototyped, and its accuracy was confirmed. The algorithm maintains a constant distance from a specific object using an ultrasonic sensor and an image sensor. It was verified by maintaining a constant distance of 15 cm from the hand and 25 cm from the floor by controlling four motors. The ultrasonic sensor of the customized stand measures the round-trip distance to the hand and to the floor and outputs data in real time to maintain a constant distance. The image sensor detects hand movements and concurrently outputs data on the position of the hand. The motors then automatically follow the movements. There was a gap of approximately 1–2 cm between the distance at which the stand was actually held from the object and the set distance. This gap arises from the offset from the center of the hand; considering the area illuminated by the light, it is small enough that the doctor cannot perceive it.

Keywords:

4-axis light stand, Hand tracking system, Algorithm, Sensor

1. Introduction

Biomedical engineering is the convergence of medicine and engineering, and it contributes significantly to the development of medicine by incorporating engineering knowledge into the medical field [1]. In particular, automation technology has been introduced into the medical field, reducing the number of tasks and the manpower required for operation. In addition, automation technology is widely applied in the medical field to reduce human errors through optimization [2]-[3].

Automation systems employed in the medical field are widely applied to medical imaging equipment, rehabilitation equipment, and surgical robots. They contribute significantly to improving medical quality, and have a significant effect in reducing treatment duration and medical costs by reducing human errors. With the aid of surgical robots, a surgery can be performed through a small incision. This is an efficient and innovative surgical method that automatically corrects the hand tremor of the surgeon [4]-[5].

The surgical robot moves while rotating the surgical tool appropriately or repeating a constant forward/reverse rotation, and experienced surgeons control the robot to perform the surgery. However, previous studies [6]-[11] focused only on robot control, in which the position of the surgeon is determined and the robot arm is adjusted accordingly rather than moved automatically; no research has been conducted on assisting the surgeon's judgment. In particular, lighting is very important for the doctor's visual focus when a desired area must be viewed brightly and closely during treatment, yet it is usually not automated and is moved manually. Unlike these previous studies, this study focuses on a system that assists the doctor's judgment rather than on controlling the robot.

This study implements an automatic motion-tracking algorithm that uses an ultrasonic sensor and a camera-based motion sensor to automatically illuminate the hand by controlling four motors, under the assumption that the surgeon moves the robot arm during surgery. A 4-axis light stand is built and the accuracy of the algorithm is verified. Surgical hand tracking involves tracking and illuminating a person's hand in real time. Hand-tracking methods are divided into two types: wearable and vision-based. The wearable method places equipment such as gloves on the hands. However, the user may find the additional equipment uncomfortable, so this method is considered unsuitable for medical equipment that requires detailed work. Vision-based hand tracking for medical purposes has received considerable attention [12]. A vision sensor is an artificial intelligence (AI) camera that recognizes objects through object tracking, object recognition, line tracking, and color recognition. Here, it is trained on the color of the human hand, which is the target object, and on various hand shapes. However, in vision-based methods, the sensor may fail to recognize the hand if its pose or shape changes. To overcome this limitation, we combined an ultrasonic sensor with the vision sensor. The ultrasonic sensor compensates for the vision sensor's recognition failures by maintaining a constant distance from the floor and the hand. Together, the camera-based vision sensor and the ultrasonic sensor fulfill the function of a light stand that tracks and follows the hand in real time. Thus, it is feasible to develop an upgraded surgical robot by implementing a safe system that maintains a certain distance from the robot arm.

2. Experimental setup and control algorithm

2.1 Experimental setup

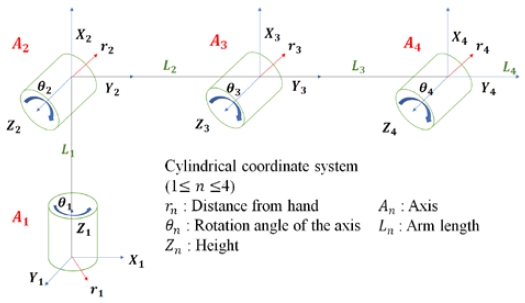

Figure 1 shows four degrees of freedom (DoF) for rotation in the direction perpendicular to base plate A1 and the z-axis. In Figure 1, A1 is controlled independently to rotate left and right according to the vision sensor, and it forms one control action together with A2 and A4. The distance-adjustment motor of A3 is controlled independently to adjust the distance from the floor. The stand is designed to maintain the distance from the hand by controlling A2, A3, and A4 based on the return time of the pulse reflected from the hand, as measured by the ultrasonic sensor.

As shown in Figure 1, to control L1, L2, L3, and L4, which correspond to the arms of the stand, the rotational force of the stepper motor is transmitted through a timing belt and pulley [13]. Figure 1 shows a yaw-roll-roll-roll configuration, in which all axes move by rotation. L1 is directly connected to the motor and base plate, and the lengths of L2, L3, and L4 were set to 200 mm, 190 mm, and 180 mm, respectively. L1 is a truss structure with increased strength, which reduces the load contributed by each arm structure and thus the load on the motor. Ultrasonic sensors on both sides of the distal end of L4 are connected to A4 in Figure 1, and the lighting and vision sensors in the center are attached to the right side of the bottom plate in the vertical and horizontal directions. The motors of A1, A2, A3, and A4 control each axis, and the A3 axis idles through the motor of A2 and the pulley and timing belt. The variable values generated by the vision and ultrasonic sensors were input through an Arduino, and the stepper motors were controlled using the calculated variable values.

In addition, the 4-axis surgical hand-tracking stand drives the motors and timing belts using the data generated by the two types of sensors and the control algorithm, and the stand structure was manufactured using a fused deposition modeling (FDM) 3D printer [14].

2.2 Control algorithm

To track a hand, it is necessary to specify the coordinates of the hand in 3D space. A stand that rotates around an axis can be expressed more easily in a cylindrical coordinate system of r, θ, and z, as shown in Figure 1, than in a 3-axis Cartesian coordinate system. We take the distance between the hand and the end of the stand as r, the angle to the hand as θ, and the height between the end of the stand and the reference plane as z. An ultrasonic sensor was used to measure the distance (r) between the hand and the end of the stand. The ultrasonic sensor consists of a transmitter and a receiver: the transmitter emits short ultrasonic pulses at regular intervals, the receiver picks up the echo reflected from an object, and the distance is calculated from the time difference. The time (t) taken for the ultrasonic wave to travel 1 cm can be obtained using Equation (1). The angle (θ) to the hand can be obtained using Equations (2) and (3).

| (1) |

| (2) |

| (3) |
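As an illustration of the time-of-flight calculation described above, the following minimal sketch converts a round-trip echo time into a one-way distance. It assumes a speed of sound of 343 m/s (dry air at about 20 °C), a value that is not stated in this paper, and the function names are hypothetical.

```python
# Assumption: speed of sound in dry air at about 20 °C (not given in the paper).
SPEED_OF_SOUND_M_PER_S = 343.0


def time_per_cm_us(speed_m_per_s: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """One-way travel time of sound over 1 cm, in microseconds."""
    return 0.01 / speed_m_per_s * 1e6


def echo_to_distance_mm(echo_us: float,
                        speed_m_per_s: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Convert a round-trip echo time (microseconds) to a one-way distance (mm)."""
    one_way_s = (echo_us * 1e-6) / 2.0  # the pulse travels out and back
    return one_way_s * speed_m_per_s * 1000.0


if __name__ == "__main__":
    print(f"time per cm: {time_per_cm_us():.2f} us")
    print(f"1000 us echo: {echo_to_distance_mm(1000.0):.1f} mm")
```

Under this assumption, sound needs roughly 29 µs to travel 1 cm, so the measured echo time is halved before it is converted to a distance.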

A vision sensor was used to measure θ. When the vision sensor executes object tracking, a rectangular box is designated around the recognized object, and the movement value of θ is determined by comparing the center point of the box with the coordinate system of the vision sensor's screen. The height (z) between the end of the stand and the reference plane was obtained with the ultrasonic sensor in the same way as r, except that z is measured in the direction parallel to the z-axis.
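The comparison between the box center and the screen coordinate system can be sketched as follows. This is only an illustration: the `Box` type, the 320-pixel frame width, and the sign convention (negative means the hand is left of the screen center) are assumptions and are not taken from the sensor used in this study.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical bounding box reported by a vision sensor, in pixels."""
    x: int       # left edge
    y: int       # top edge
    width: int
    height: int


def horizontal_offset(box: Box, frame_width: int = 320) -> float:
    """Offset of the box center from the screen center, in pixels.

    Negative values mean the box is left of center, positive values right.
    The default frame width is an assumed value.
    """
    center_x = box.x + box.width / 2
    return center_x - frame_width / 2


if __name__ == "__main__":
    hand = Box(x=100, y=50, width=40, height=40)
    print(horizontal_offset(hand))
```

A controller would rotate the stand until this offset falls inside a tolerance band around zero.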

The distance r between the hand and the end of the stand was measured with an ultrasonic sensor, and a total of four cases were set, as shown in Table 1.

For the case of r ≥ 1000 mm in Table 1, it was determined that the position of the hand had been lost, and motion was stopped until the hand was detected again. For r ≥ 260 mm, the hand is far from the end of the stand; hence, the arm is extended to reduce the distance to the hand. For r ≤ 220 mm, the hand is close to the end of the stand; hence, the arm is bent to increase the distance from the hand. In all other cases, the distance to the hand is considered appropriate, and the operation is stopped. For r ≤ 220 mm, the stepper motor is rotated and the distance is measured with the ultrasonic sensor; this last case in Table 1 is a control operation that is executed repeatedly until an appropriate distance is reached.
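The four cases for r described above can be written as a small decision function. The thresholds are those given in the text; the enum and function names are hypothetical, and the order of the checks (hand lost first) is an assumption about how overlapping conditions are resolved.

```python
from enum import Enum


class ArmAction(Enum):
    HOLD_LOST = "hand lost: stop until it is detected again"
    EXTEND = "extend the arm toward the hand"
    RETRACT = "bend the arm away from the hand"
    HOLD_OK = "distance appropriate: stop"


def classify_r(r_mm: float) -> ArmAction:
    """Map a measured hand distance r (mm) to one of the four cases."""
    if r_mm >= 1000:   # checked first so that it is not treated as "too far"
        return ArmAction.HOLD_LOST
    if r_mm >= 260:
        return ArmAction.EXTEND
    if r_mm <= 220:
        return ArmAction.RETRACT
    return ArmAction.HOLD_OK


if __name__ == "__main__":
    for r in (1200, 300, 240, 200):
        print(r, classify_r(r).name)
```

The band between 220 mm and 260 mm acts as a dead band, which keeps the arm from oscillating around a single target value.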

In Table 2, the height z between the end of the stand and the reference plane was measured using an ultrasonic sensor in the same way as r, and the operation based on the z value was classified into the following three categories. For z ≥ 80 mm, the stand is far from the reference plane; hence, the arm is lowered to bring it closer. For z ≤ 40 mm, the stand is close to the reference plane; hence, the arm is raised to move it away. For any other value of z, the distance to the reference plane is considered appropriate, and the operation is stopped. For z ≥ 80 mm and z ≤ 40 mm, rotating the stepper motor by one step and then measuring the distance with the ultrasonic sensor constitutes one operation, which is repeated until an appropriate distance is achieved.
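The step-then-measure operation for z can be simulated as a short loop. In this sketch the ultrasonic reading is replaced by directly updating a simulated height, and the height change per motor step (`mm_per_step`) and the step limit are assumed values chosen only for illustration.

```python
from typing import Tuple


def settle_height(z_mm: float,
                  mm_per_step: float = 2.0,
                  max_steps: int = 1000) -> Tuple[float, int]:
    """Repeat one motor step plus one measurement until 40 mm < z < 80 mm.

    Returns the final simulated height and the number of steps taken.
    """
    steps = 0
    while steps < max_steps:
        if z_mm >= 80:
            z_mm -= mm_per_step   # one step lowering the arm
        elif z_mm <= 40:
            z_mm += mm_per_step   # one step raising the arm
        else:
            break                 # distance appropriate: stop
        steps += 1                # each pass is one step and one measurement
    return z_mm, steps


if __name__ == "__main__":
    print(settle_height(100.0))
    print(settle_height(30.0))
```

On real hardware the subtraction and addition would be replaced by a motor step command followed by a fresh sensor reading.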

In Table 3, the angle θ to the hand was measured using a vision sensor, and three cases were set as follows. For θ ≥ 200°, the hand is positioned to the left of the stand's current direction, and the stand rotates to the left to match it. For θ ≤ 180°, the hand is positioned to the right, and the stand rotates to the right to match it. In all other cases, the angles of the hand and the stand are considered the same, and rotation in the θ direction is stopped.

2.3 Experiment procedure

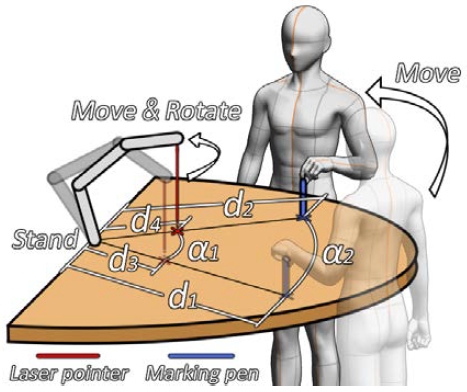

We conducted two experiments to assess the performance of the light stand: one on its variable calculation ability and one on its hand-tracking ability. The experimental procedures were as follows. First, we verified the variable calculation ability by measuring the distance, direction, and height between the hand and the ultrasonic sensors after detecting the hand with the vision sensor. Second, we observed the hand-tracking ability of the stand, as shown in Figure 2. The stand position was tracked using a laser pointer, and the hand position was tracked using a marking pen. The distances between the hand and the center of the stand before and after tracking were d1 and d2, respectively. The distances between the laser pointer and the center of the stand before and after tracking were d3 and d4, respectively. The rotation angles of the laser pointer and the hand from the initial to the end position were α1 and α2, respectively.

After collecting the experimental data, the error between the target and actual values was calculated. The target tracking distance is d1 − d2, and the actual tracking distance is d3 − d4. The target tracking angle is α2, and the actual tracking angle is α1. The tracking difference was calculated by subtracting the actual value from the target value.
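The calculation above can be expressed directly in code. The function name and the sample numbers below are made up for illustration and are not measurements from this study.

```python
def tracking_differences(d1: float, d2: float, d3: float, d4: float,
                         alpha1: float, alpha2: float) -> dict:
    """Compute target, actual, and difference values for distance and angle.

    d1, d2: hand-to-center distances before and after tracking (target).
    d3, d4: laser-pointer-to-center distances before and after (actual).
    alpha1: rotation of the laser pointer (actual); alpha2: of the hand (target).
    """
    target_distance = d1 - d2
    actual_distance = d3 - d4
    return {
        "target_distance": target_distance,
        "actual_distance": actual_distance,
        "distance_difference": target_distance - actual_distance,
        "angle_difference": alpha2 - alpha1,
    }


if __name__ == "__main__":
    # Illustrative values only (mm and degrees).
    print(tracking_differences(300, 200, 290, 200, alpha1=40, alpha2=45))
```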

3. Experimental results

3.1 Distance and angle measurement

The experimental results for recognizing and operating the initial hand position are listed in Table 4. D is d1 − d3, which indicates the distance between the laser pointer and hand, θ is the position of the hand on the vision sensor screen, and z is the height difference between the stand and floor.

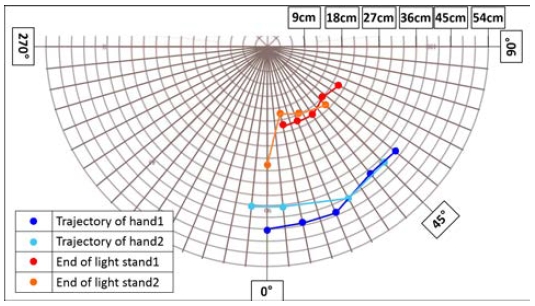

Figure 3 shows the results of the stand's tracking trajectory. In Figure 3, the distance moved by the hand (d1 − d2) is indicated as the target value, the distance traveled by the laser pointer (d3 − d4) is indicated as the actual value, and the angle through which the hand and the laser pointer moved is indicated by α. The deviation in the actual travel distance is expressed as a difference.

Consequently, r showed a deviation of 4–21 mm and α showed a deviation of 0–11.25°. The deviation increased as the distance to be moved increased. However, it remained within the tolerance range of the sensor set in the algorithm.

4. Discussion

The ultrasonic sensor is located at the arm end of the stand. Hence, it is not perpendicular to the hand or floor, and it shakes with the vibration of the motor during operation. To address this, an iron plate was attached to the sensor so that the center of gravity is directed downward, reducing the vibration of the sensor. Consequently, a difference of more than 30 mm was reduced to 10–20 mm. As with the ultrasonic sensor, it is difficult for the vision sensor to track the hand because of the shaking caused by motor vibration. To address this, the vision sensor was attached to the base plate so that it vibrates less and rotates with the axis, which made hand tracking easier. As a result, the stand tracked the hand smoothly within the set measurement range, and it was confirmed that the error was within the acceptable range.

5. Conclusion

The experimental results showed an error of 5–10 mm between the hand and light stand. This error occurs because the ultrasonic sensor and the vision sensor are located at the end of the stand arm; hence, they are not perpendicular to the hand or the floor, and they vibrate with the motor during operation. By attaching an iron plate to the ultrasonic sensor, the center of gravity was lowered to reduce the movement of the bearing, thereby reducing the error by approximately 0–5 mm. Assuming a light diameter of approximately 100 mm, this difference is too small for medical personnel to notice, so the stand is considered usable as an automatic hand-tracking light stand system.

In the future, dividing the step angle of the motor so that it moves by a smaller angle per step would make the rotation smoother and reduce the vibration received by the sensor, resulting in a more sophisticated automatic light system.
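The effect of dividing the step angle can be illustrated with simple arithmetic. The sketch below assumes a motor with 200 full steps per revolution (1.8° per step), which is a common value but is not specified in this paper, and it ignores the pulley and belt ratio; the function name is hypothetical.

```python
def step_angle_deg(full_steps_per_rev: int = 200, microsteps: int = 1) -> float:
    """Shaft angle moved per (micro)step, in degrees.

    full_steps_per_rev defaults to an assumed 200 (1.8 degrees per full step).
    """
    return 360.0 / (full_steps_per_rev * microsteps)


if __name__ == "__main__":
    for m in (1, 2, 4, 8, 16):
        print(f"1/{m} step: {step_angle_deg(microsteps=m):.4f} deg")
```

Under these assumptions, 1/16 microstepping would reduce each increment from 1.8° to about 0.11°.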

Acknowledgments

This research was supported by Academic Support Project in the National Korea Maritime & Ocean University Research Fund (#2022-0333-01).

This paper is an expanded version of the proceeding paper entitled “Automatic hand tracking system control with 4-axis light stand for surgical lights” presented at the ICACE 2022.

Author Contributions

Conceptualization, S. Oh and J. Ko; Software, H. Kim, D. Kim and I. Kim; Formal Analysis, H. Kim, D. Kim, I. Kim and H. Kim; Investigation, S. Oh, H. Kim and D. Kim; Resources, J. Ko; Data Curation, S. Oh and H. Kim; Writing-Original Draft Preparation, S. Oh, H. Kim, D. Kim and I. Kim; Writing-Review & Editing, H. Kim and J. Ko; Visualization, S. Oh, H. Kim, D. Kim and I. Kim; Supervision, J. Ko; Project Administration, J. Ko.

References

- J. Kim, S. Yang, S. Kim, and H. Kim, “Application of robots in general surgery,” Journal of the Korean Medical Association, vol. 64, no. 10, pp. 678-687, 2021 (in Korean). [https://doi.org/10.5124/jkma.2021.64.10.678]

- M. Song and Y. Cho, “The present and future of medical robots: Focused on surgical robots,” Journal of Digital Convergence, vol. 19, no. 4, pp. 349-353, 2021 (in Korean).

- K. Kim, “Challenge and problem of medical robot surgery research,” Journal of Biomedical Engineering Research, vol. 30, no. 4, pp. 271-278, 2009 (in Korean).

- H. Jung, H. Song, J. Woo, and S. Park, “Development of control and HMI for safe robot assisted minimally invasive surgery,” Journal of the Korean Society for Precision Engineering, vol. 28, no. 9, pp. 1048-1053, 2011 (in Korean).

- S. Kim and Y. Cho, “Development trends and use cases of medical service robots: Focused on logistics, guidance, and drug processing robots,” Journal of Digital Convergence, vol. 19, no. 2, pp. 523-529, 2021 (in Korean).

- X. Bao, S. Guo, N. Xiao, et al., “Toward cooperation of catheter and guidewire for remote-controlled vascular interventional robot,” Proceedings of the 2017 IEEE International Conference on Mechatronics and Automation, pp. 422-426, 2017. [https://doi.org/10.1109/ICMA.2017.8015854]

- S. Guo, Y. Wang, N. Xiao, et al., “Study on real-time force feedback for a master-slave interventional surgical robotic system,” Biomedical Microdevices, vol. 20, no. 2, p. 37, 2018. [https://doi.org/10.1007/s10544-018-0278-4]

- Y. Zhao, S. Guo, N. Xiao, et al., “Operating force information on-line acquisition of a novel slave manipulator for vascular interventional surgery,” Biomedical Microdevices, vol. 20, no. 2, p. 33, 2018. [https://doi.org/10.1007/s10544-018-0275-7]

- Y. Wang, S. Guo, N. Xiao, et al., “Online measuring and evaluation of guidewire inserting resistance for robotic interventional surgery systems,” Microsystem Technologies, vol. 24, pp. 3467-3477, 2018. [https://doi.org/10.1007/s00542-018-3750-4]

- X. Yin, S. Guo, H. Hirata, and H. Ishihara, “Design and experimental evaluation of a teleoperated haptic robot assisted catheter operating system,” Journal of Intelligent Material Systems and Structures, vol. 27, no. 1, pp. 3-16, 2016. [https://doi.org/10.1177/1045389X14556167]

- J. Choi, S. Han, H. Park, and J. Park, “Hand shape recognition using hybrid camera,” Journal of Korean Dance, vol. 9, no. 1, pp. 199-219, 2013 (in Korean).

- H. Park, K. Ahn, J. Min, and J. Song, “5 DOF home robot arm based on counterbalance mechanism,” Journal of the Korean Society of Robotics, vol. 15, pp. 48-54, 2020 (in Korean). [https://doi.org/10.7746/jkros.2020.15.1.048]

- S. Lee, “Kinematic analysis and development of robot simulator for open-source six-degree-of-freedom robot arm,” Journal of the Korean Society of Manufacturing Technology Engineers, vol. 31, no. 5, pp. 338-345, 2022 (in Korean). [https://doi.org/10.7735/ksmte.2022.31.5.338]