A study on selective dehazing system based on haze detection network

Copyright © The Korean Society of Marine Engineering

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/3.0), which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

Image dehazing, which aims to recover a clear image from a single hazy or foggy image, is a particularly challenging task. Many recent studies have used deep neural networks to improve dehazing performance. However, existing approaches do not consider changes in haze density, so distortions such as sharpening may occur even when a clear image is input. In addition, because the number of datasets available for deep learning that contain pairs of hazy and corresponding haze-free (ground truth) indoor images is quite limited, the haze removal performance may be reduced. To solve these problems, this paper proposes a selective dehazing system that combines a haze detection network and a dehazing network. The proposed haze detection network uses a CNN structure to determine the haze density of the input image and thereby decides whether the dehazing network is applied. The proposed dehazing network uses the U-Net model to learn efficiently from a limited amount of data. To evaluate the performance of the proposed networks, only the 45 image pairs of the O-haze dataset were used. The haze detection results show that the probability of detecting a hazy image is more than 99% and the probability of detecting a haze-free image is 97.9%. In the dehazing evaluation, the PSNR and SSIM improved by more than 10% compared with existing networks.

Keywords:

Image processing, Deep neural network, Dehazing, Haze detection

1. Introduction

Owing to the development of computing devices, demand for camera-based autonomous systems such as autonomous driving, drones, and monitoring systems has recently increased, and research on neural-network-based image processing technologies such as object recognition, segmentation, and depth estimation has been conducted continuously. The performance of neural-network-based models degrades rapidly when the quality of the input image is poor owing to environmental factors. If the image quality is degraded by a hardware problem during the image collection process, the problem can be solved by replacing the device. However, if the degradation is caused by weather phenomena such as sea fog, mountain fog, or yellow dust, the input image contains noise from scattered light, and image processing is therefore necessary. Haze, which includes these weather phenomena, is caused by atmospheric water vapor and dust, which increase the scattering of light and reduce visibility. As a result, the amount of input information available to a general image processing algorithm is reduced, and its accuracy is lowered. In a neural-network-based image processing model, the input is distorted, and thus an unexpected output may be generated. Therefore, the use of neural networks to remove haze is being actively studied in the field of computer vision [1]-[3].

Zhang and Patel [4] developed a representative dehazing network that uses a GAN to generate the atmospheric light A and the transmission map t(x) of the atmospheric scattering model and then calculates a haze-free image from them. However, because a U-Net-based encoder is used to generate the atmospheric light, it is difficult to use convolutional layer flattening and a multi-scale fully connected layer when producing the transmission map.

D. Engin et al. [5] proposed an efficient network that trains two models from one image, a haze-free image generator and a hazy image generator, based on a cycle GAN. However, owing to the characteristics of a cycle GAN, training takes more than twice as long as for general CNN-based networks, and because the haze dataset is extremely small, the network is difficult to train sufficiently and does not achieve excellent performance.

In this paper, with the commercialization of dehazing networks in mind, we propose a selective dehazing system that applies dehazing only to images that require haze removal. The proposed system is composed of a haze detection network, which is kept as small as possible while maintaining its level of performance, and a dehazing network that receives inputs selectively based on the haze detection results. As a result, the average number of computations is reduced.

2. Related Studies

2.1 Atmospheric Scattering Model

To describe the composition of hazy images, McCartney [6] first proposed the use of an atmospheric scattering model, which was further developed by Narasimhan and Nayar [7][8]. Equation (1) describes the atmospheric scattering model.

$$I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr) \tag{1}$$

Here, I is the observed hazy image, J is the true scene radiance, A is the global atmospheric light, t is the medium transmission map, and x is the pixel index. Given A and t, J(x) can be reconstructed from I(x); t(x) refers to the portion of light that reaches the camera without being scattered. Moreover, t(x) is determined by the scene depth d(x) and the atmospheric scattering coefficient β and is defined through Equation (2).

$$t(x) = e^{-\beta d(x)} \tag{2}$$

Because it is impossible to measure all variables of the atmospheric scattering model using current sensors, most studies on haze limit the number of variables to two.
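As an illustration of this section, the following sketch applies the atmospheric scattering model, commonly written as I(x) = J(x)t(x) + A(1 − t(x)) with t(x) = exp(−βd(x)), to synthesize a hazy image from a clear one and then inverts it. It assumes NumPy; the function names, the constant atmospheric light, the toy depth map, and the clamp value `t_min` are illustrative assumptions and not part of the proposed system.

```python
import numpy as np

def transmission(depth, beta):
    """t(x) = exp(-beta * d(x)): fraction of light that reaches the camera unscattered."""
    return np.exp(-beta * depth)

def add_haze(clear, depth, atmospheric_light, beta):
    """Forward model: I(x) = J(x) t(x) + A (1 - t(x))."""
    t = transmission(depth, beta)[..., np.newaxis]  # broadcast over color channels
    return clear * t + atmospheric_light * (1.0 - t)

def remove_haze(hazy, depth, atmospheric_light, beta, t_min=0.1):
    """Inverse model: J(x) = (I(x) - A) / t(x) + A, with t clamped away from zero."""
    t = np.maximum(transmission(depth, beta), t_min)[..., np.newaxis]
    return (hazy - atmospheric_light) / t + atmospheric_light

# Toy example: a random 4x4 RGB scene in [0, 1] whose depth grows from left to right.
rng = np.random.default_rng(0)
clear = rng.random((4, 4, 3))
depth = np.tile(np.linspace(0.5, 2.0, 4), (4, 1))  # hypothetical depth values
hazy = add_haze(clear, depth, atmospheric_light=0.9, beta=1.0)
restored = remove_haze(hazy, depth, atmospheric_light=0.9, beta=1.0)
```

In this toy case A, β, and d(x) are known, so the inversion recovers the clear image. In practice they are unknown, which is why dehazing methods must estimate A and t(x) or learn the mapping directly.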

2.2 Single Image Dehazing

Dehazing methods with single image inputs can be divided into methods that use information in the image and learning-based approaches. Methods that use information in the image include a model for estimating the optical transmission [9], the dark channel prior (DCP) [10], which is based on the statistics of haze-free images, and the color attenuation prior (CAP) [11], which reconstructs the depth information; many other studies are also ongoing. Learning-based methods using neural networks, which perform well in various fields where images are the input, generally include CNN- and GAN-based approaches. The CNN-based methods aim to reconstruct the true scene radiance by estimating the global atmospheric light and the medium transmission map of the atmospheric scattering model [12]-[14]. Because these methods stack a large number of convolutional layers, the number of parameters is large, and producing an estimate takes an excessive amount of time.
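To make the prior-based family more concrete, the sketch below computes a dark channel in the sense of the DCP [10]: the minimum over the color channels followed by a minimum over a local patch. It assumes NumPy 1.20 or later (for `sliding_window_view`); the patch size, the edge padding, and the toy images are illustrative assumptions, and the sketch covers only the dark channel itself, not the full DCP dehazing pipeline.

```python
import numpy as np

def dark_channel(image, patch=3):
    """Minimum over color channels, then minimum over a patch x patch neighborhood."""
    min_rgb = image.min(axis=2)                    # per-pixel channel minimum
    pad = patch // 2
    padded = np.pad(min_rgb, pad, mode="edge")     # replicate the image borders
    windows = np.lib.stride_tricks.sliding_window_view(padded, (patch, patch))
    return windows.min(axis=(2, 3))                # local minimum filter

# Toy data: a "clear" image with one nearly dark channel, and a hazy version of it
# built with a constant transmission of 0.4 and atmospheric light of 0.9 (assumed values).
rng = np.random.default_rng(0)
clear = rng.random((8, 8, 3))
clear[..., 2] *= 0.05
hazy = 0.4 * clear + 0.9 * (1.0 - 0.4)

dark_clear = dark_channel(clear)
dark_hazy = dark_channel(hazy)
```

The dark channel of the toy clear image stays close to zero, whereas haze lifts it toward the atmospheric light, which is the statistical cue that the DCP exploits.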

2.3 Haze Detection

Dehazing networks are typically composed of a stack of convolutional layers and require a high-performance GPU. For this reason, it is difficult to pass every image through the dehazing network when constructing a dehazing system. Therefore, to reduce the load on the hardware, a haze detection network is required that detects the presence of haze and determines whether the dehazing network must be used. Studies on haze detection have been conducted in parallel with haze removal, and methods using image processing include the dark channel prior [10], which assumes colorful images, and a fast semi-inverse approach [17] that generalizes a pixel-based operation. As neural networks continue to be applied to various fields, research on haze using CNN and GAN methods is expected to increase.

3. Proposed Method

3.1 System Overview

In this paper, for a real-time automated monitoring system, we propose a selective dehazing system that determines the existence of haze and removes it from the image.

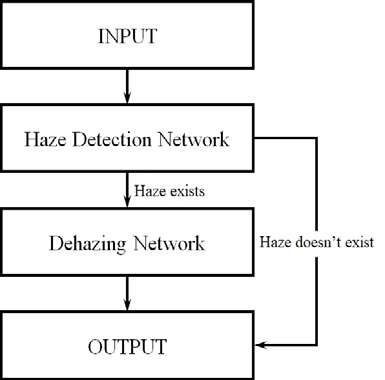

Figure 1 shows the proposed system. The haze detection network determines whether haze exists in the input image. If haze is present, the image is input into the dehazing network, which outputs the image with the haze removed. If haze does not exist, the input image is output unchanged. The detection network consists of convolutional layers and fully connected layers and outputs the probability of haze, which determines whether the image is input to the dehazing network. The dehazing network is an encoder–decoder architecture based on an improved U-Net [18] and removes haze from the input image according to the judgment of the detection network.
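The routing logic described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: `selective_dehaze`, `toy_detect`, and `toy_dehaze` are hypothetical names, the two toy functions merely stand in for the trained networks, and the 0.5 threshold follows the decision rule used in the evaluation in Section 4.3.

```python
def selective_dehaze(image, detect, dehaze, threshold=0.5):
    """Run the (expensive) dehazing model only when the detector reports haze.

    Returns the output image and a flag telling whether dehazing was applied.
    """
    haze_probability = detect(image)
    if haze_probability > threshold:
        return dehaze(image), True
    return image, False  # haze-free input is passed through untouched

# Stand-ins for the two networks (hypothetical): mean brightness plays the role
# of the haze probability, and halving the intensities plays the role of dehazing.
def toy_detect(image):
    pixels = [p for row in image for p in row]
    return sum(pixels) / len(pixels)

def toy_dehaze(image):
    return [[p * 0.5 for p in row] for row in image]

hazy_image = [[0.9, 0.8], [0.7, 0.9]]
clear_image = [[0.1, 0.2], [0.3, 0.1]]

hazy_out, hazy_flag = selective_dehaze(hazy_image, toy_detect, toy_dehaze)
clear_out, clear_flag = selective_dehaze(clear_image, toy_detect, toy_dehaze)
```

Because the clear image is returned as-is, the dehazing model is never evaluated for it, which is where the reduction in the average number of computations comes from.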

3.2 Haze Detection Network

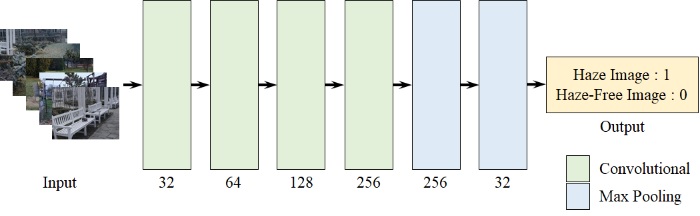

The haze detection network outputs the probability that haze is present in the input image and uses only the minimum number of layers needed to maintain its performance, which reduces the number of computations in the dehazing system.

Figure 2 shows the architecture of the haze detection network, which is composed of four convolutional layers for feature extraction and two fully connected layers that determine the probability of haze presence over the whole image. To extract local features in the image while minimizing the number of computations, the kernel size of each convolutional layer is set to (7, 7), the stride is set to (3, 3), and no padding is used. As a result, the number of parameters after the flattening step is minimized. The proposed network is trained with the cross-entropy loss used in binary classification and outputs 1 when haze exists and 0 otherwise.
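To see why a (7, 7) kernel with a (3, 3) stride and no padding keeps the flattened feature vector small, the spatial size can be traced through the four convolutional layers. The sketch below is a worked calculation only; the 512-pixel input side and the 32 output channels are assumptions chosen for illustration, since the input resolution and channel counts are not stated here.

```python
def conv_output_size(size, kernel=7, stride=3):
    """Spatial size after one unpadded ('valid') convolution."""
    return (size - kernel) // stride + 1

def trace_sizes(size, num_layers=4, kernel=7, stride=3):
    """Spatial size before and after each of the stacked convolutions."""
    sizes = [size]
    for _ in range(num_layers):
        sizes.append(conv_output_size(sizes[-1], kernel, stride))
    return sizes

sizes = trace_sizes(512)          # 512 is an assumed input side length
print(sizes)                      # [512, 169, 55, 17, 4]

channels = 32                     # assumed number of channels in the last layer
flattened = sizes[-1] ** 2 * channels
print(flattened)                  # 512 features enter the first fully connected layer
```

Under these assumptions, each layer shrinks the side length by roughly a factor of three, so a 512 × 512 input is reduced to a 4 × 4 map before flattening.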

3.3 Dehazing Network

The dehazing network estimates haze-free images from the images in which the haze detection network has detected haze.

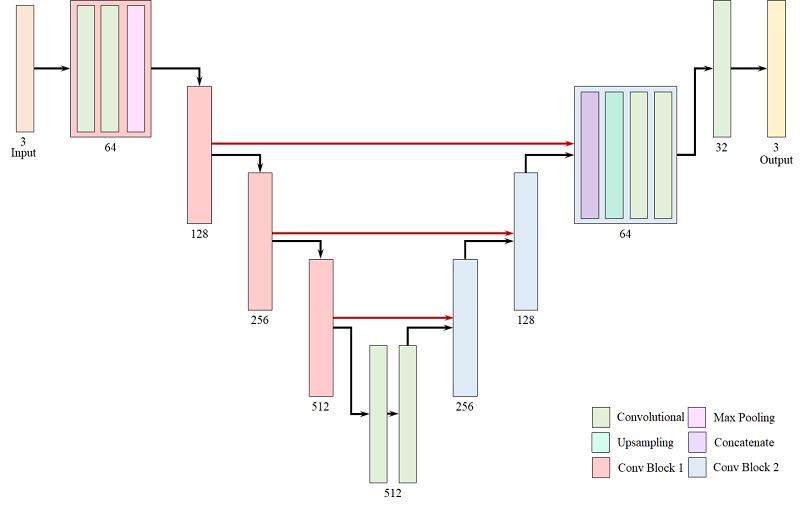

Figure 3 shows the architecture of the dehazing network, an encoder–decoder structure that extracts strong features from an image by increasing the number of channels while reducing the spatial size of the image. The encoder consists of four convolutional blocks, each made up of two layers (a convolutional layer and a max pooling layer), and the decoder consists of three convolutional blocks, each made up of a concatenate layer, an up-sampling layer, and two convolutional layers. The concatenate layer of the decoder is an input data mirroring process that compensates for the pixel loss in the image boundary area that occurs during down-sampling. The proposed network was trained using the mean squared error between the haze-free image and the output. Because it uses a large number of convolutional layers, it requires a large number of computations, but it demonstrates excellent dehazing performance.
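The interplay of max pooling, up-sampling, and concatenation in a U-Net-style encoder–decoder can be illustrated on a single level with NumPy. This is a shape-level sketch under stated assumptions: it omits the convolutions, uses nearest-neighbor up-sampling (the up-sampling method is not specified here), and all function names and tensor sizes are hypothetical.

```python
import numpy as np

def max_pool_2x2(x):
    """2x2 max pooling on an (H, W, C) array with even H and W."""
    h, w, c = x.shape
    return x.reshape(h // 2, 2, w // 2, 2, c).max(axis=(1, 3))

def upsample_2x(x):
    """Nearest-neighbor 2x up-sampling on an (H, W, C) array."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

def skip_connect(decoder_features, encoder_features):
    """Concatenate decoder and encoder features along the channel axis."""
    return np.concatenate([decoder_features, encoder_features], axis=-1)

# One encoder/decoder level on toy features of shape (8, 8, 4).
encoder_features = np.arange(8 * 8 * 4, dtype=np.float32).reshape(8, 8, 4)
pooled = max_pool_2x2(encoder_features)              # shape (4, 4, 4)
upsampled = upsample_2x(pooled)                      # shape (8, 8, 4)
merged = skip_connect(upsampled, encoder_features)   # shape (8, 8, 8)
```

The up-sampled features are blocky because pooling discarded three of every four values; concatenating the encoder features gives the following convolutions access to the detail that was lost.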

4. Experiment

4.1 Datasets

The O-haze dataset [19], which is the input of the experiment, was created using two commercial haze-generating machines to produce an environment in which the haze is evenly distributed. The dataset consists of 45 different outdoor scenes, each comprising a hazy image and a haze-free image.

4.2 Detail of Training

The proposed network was implemented with Python 3.7 and TensorFlow 2.3.1, and the GPU was an NVIDIA Quadro RTX 8000. In this experiment, the Adam optimizer with a learning rate of 0.001 was used, and the batch size was 32. The numbers of training, validation, and testing data were 35, 5, and 5, respectively. The complete training process was repeated 100 times.
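The 35/5/5 partition of the 45 image pairs can be reproduced with a short helper such as the one below. How the split was actually drawn is not stated, so the random shuffle, the fixed seed, and the function name `split_indices` are assumptions made only for illustration.

```python
import random

def split_indices(num_samples=45, num_train=35, num_val=5, seed=0):
    """Shuffle sample indices once and cut them into train/validation/test parts."""
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)   # fixed seed keeps the split reproducible
    train = indices[:num_train]
    val = indices[num_train:num_train + num_val]
    test = indices[num_train + num_val:]
    return train, val, test

train_ids, val_ids, test_ids = split_indices()
print(len(train_ids), len(val_ids), len(test_ids))  # 35 5 5
```

Keeping the three index lists disjoint ensures that the test images are never seen during training or model selection.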

4.3 Haze Detection Evaluation

Table 1 shows the evaluation of the haze detection network on a total of 10 hazy images, which are the input data of the system, and their ground truth, the haze-free images. An image is judged to contain haze if the output is greater than 0.5 and to be haze-free if the output is less than 0.5; under this criterion, the haze detection performance is 100%. However, when the ground truth of O-haze 36 was input, the probability of the image having haze was estimated at 10%. This is believed to be because the sky occupies a large portion of the image and its intensity is high. In addition, to verify the haze detection network, two images captured near Korea Maritime University on a day with sea fog were input; the output for both was 0.999, confirming the presence of haze.

4.4 Dehazing Evaluation

Because the output of the dehazing network is an image, the evaluation was divided into quantitative and qualitative parts. The quantitative evaluation used the PSNR and SSIM, which are metrics for evaluating an estimated image. Table 2 shows the results of the quantitative evaluation. Compared with CVPR'16, ICCV'17, and CVPR'18, the PSNR of the proposed dehazing network improved by more than 1.5 points, and the SSIM improved by more than 10%. In addition, the results confirm that the haze detection network is needed: when the ground truth is input into the dehazing network, the output is distorted and computations are wasted.
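For reference, the PSNR used in the quantitative evaluation follows the standard definition 10·log10(MAX² / MSE). The sketch below assumes NumPy and 8-bit images with a peak value of 255; the function name and the toy images are illustrative, and SSIM, which is computed over local windows, is not reproduced here.

```python
import math
import numpy as np

def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between a ground truth and an estimate."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return math.inf               # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

# Toy check: every pixel is off by one gray level, so the MSE is exactly 1.
ground_truth = np.full((4, 4, 3), 100, dtype=np.uint8)
dehazed = ground_truth + 1
score = psnr(ground_truth, dehazed)   # about 48.13 dB
```

Higher values indicate that the dehazed output is closer to the ground truth. Casting to floating point before subtracting avoids wrap-around errors with unsigned 8-bit images.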

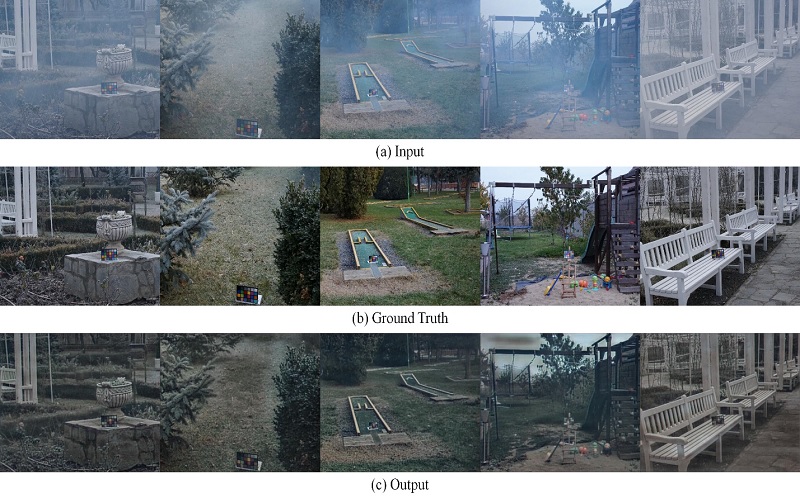

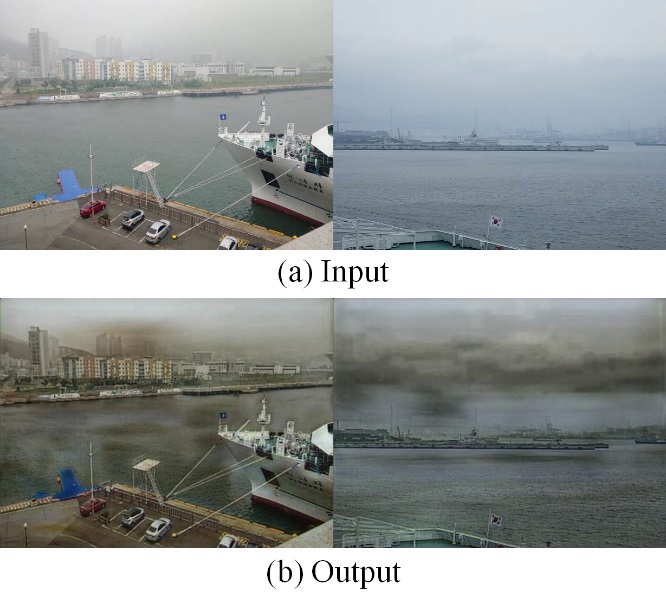

Figure 4 shows a hazy image from the testing data, its ground truth image, and the output of the dehazing network. Although the haze was removed, the intensity of the output decreased, and color stains that are not present in the original were observed in the floor and sky areas, where the colors are similar. Figure 5 shows images taken near Korea Maritime University and the corresponding outputs of the dehazing network. Buildings that were difficult to identify in the original images became visible after the haze was removed, but stains were observed in the sky and sea areas, as in the test data of the O-haze dataset.

5. Conclusion

In this paper, we proposed a selective dehazing system that first confirms the presence of haze through a haze detection network and then removes it by passing the image to a dehazing network. In addition, the performances of the haze detection network and the dehazing network were evaluated and tested. Considering that only 35 image pairs were used to train the proposed system, an excellent performance was achieved. If more haze data can be compiled, the performance can be improved further. An efficient monitoring system can be established if the proposed system is applied to CCTV systems installed in coastal and mountainous areas where haze occurs. In the future, we plan to carry out research on dehazing networks specialized for marine environments using a dataset that includes events occurring at sea.

Acknowledgments

This research was supported by the MKE (The Ministry of Knowledge Economy), Korea, under the IT/SW Creative research program supervised by the NIPA (National IT Industry Promotion Agency) (A1801-20-1003).

Author Contributions

Conceptualization, H. I. Seo and D. H. Seo; Methodology, H. I. Seo and J. H. Seong; Software, H. I. Seo and J. W. Bae; Formal Analysis, H. I. Seo; Investigation, H. I. Seo and J. W. Bae; Resources, H. R. Cho, J. H. Seong, and D. H. Seo; Data Curation, H. I. Seo, J. W. Bae, and J. H. Seong; Writing-Original Draft Preparation, H. I. Seo; Writing-Review & Editing, H. I. Seo, H. R. Cho, and J. H. Seong; Visualization, J. W. Bae; Supervision, J. H. Seong and D. H. Seo; Project Administration, D. H. Seo; Funding Acquisition, D. H. Seo.

References

[1] C. Ancuti, et al., “NTIRE 2018 challenge on image dehazing: Methods and results,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 891-901, 2018.

[2] C. O. Ancuti, et al., “NTIRE 2019 image dehazing challenge report,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 0-0, 2019.

[3] C. O. Ancuti, et al., “NTIRE 2020 challenge on nonhomogeneous dehazing,” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, pp. 490-491, 2020.

[4] H. Zhang and V. M. Patel, “Densely connected pyramid dehazing network,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3194-3203, 2018. https://doi.org/10.1109/CVPR.2018.00337

[5] D. Engin, et al., “Cycle-dehaze: Enhanced CycleGAN for single image dehazing,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 825-833, 2018. https://doi.org/10.1109/CVPRW.2018.00127

[6] E. J. McCartney, Optics of the Atmosphere: Scattering by Molecules and Particles, New York, USA: John Wiley and Sons, 1976.

[7] S. G. Narasimhan and S. K. Nayar, “Contrast restoration of weather degraded images,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 25, no. 6, pp. 713-724, 2003. https://doi.org/10.1109/TPAMI.2003.1201821

[8] S. K. Nayar and S. G. Narasimhan, “Vision in bad weather,” Proceedings of the Seventh IEEE International Conference on Computer Vision, vol. 2, pp. 820-827, 1999. https://doi.org/10.1109/ICCV.1999.790306

[9] P. S. Chavez Jr., “An improved dark-object subtraction technique for atmospheric scattering correction of multispectral data,” Remote Sensing of Environment, vol. 24, no. 3, pp. 459-479, 1988. https://doi.org/10.1016/0034-4257(88)90019-3

[10] K. He, et al., “Single image haze removal using dark channel prior,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 33, no. 12, pp. 2341-2353, 2010. https://doi.org/10.1109/TPAMI.2010.168

[11] Q. Zhu, et al., “A fast single image haze removal algorithm using color attenuation prior,” IEEE Transactions on Image Processing, vol. 24, no. 11, pp. 3522-3533, 2015. https://doi.org/10.1109/TIP.2015.2446191

[12] B. Cai, et al., “DehazeNet: An end-to-end system for single image haze removal,” IEEE Transactions on Image Processing, vol. 25, no. 11, pp. 5187-5198, 2016. https://doi.org/10.1109/TIP.2016.2598681

[13] R. Mondal, et al., “Image dehazing by joint estimation of transmittance and airlight using bi-directional consistency loss minimized FCN,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 920-928, 2018. https://doi.org/10.1109/CVPRW.2018.00137

[14] W. Ren, et al., “Single image dehazing via multi-scale convolutional neural networks,” European Conference on Computer Vision, pp. 154-169, 2016. https://doi.org/10.1007/978-3-319-46475-6_10

[15] S. Ki, et al., “Fully end-to-end learning based conditional boundary equilibrium GAN with receptive field sizes enlarged for single ultra-high resolution image dehazing,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 817-824, 2018.

[16] Y. Qu, et al., “Enhanced pix2pix dehazing network,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 8160-8168, 2019.

[17] C. O. Ancuti, et al., “A fast semi-inverse approach to detect and remove the haze from a single image,” Asian Conference on Computer Vision, pp. 501-514, 2010. https://doi.org/10.1007/978-3-642-19309-5_39

[18] O. Ronneberger, et al., “U-net: Convolutional networks for biomedical image segmentation,” International Conference on Medical Image Computing and Computer-Assisted Intervention, pp. 234-241, 2015. https://doi.org/10.1007/978-3-319-24574-4_28

[19] C. O. Ancuti, et al., “O-haze: a dehazing benchmark with real hazy and haze-free outdoor images,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 754-762, 2018. https://doi.org/10.1109/CVPRW.2018.00119

[20] B. Cai, et al., “DehazeNet: An end-to-end system for single image haze removal,” IEEE Transactions on Image Processing, vol. 25, no. 11, pp. 5187-5198, 2016. https://doi.org/10.1109/TIP.2016.2598681

[21] B. Li, et al., “AOD-Net: All-in-one dehazing network,” Proceedings of the IEEE International Conference on Computer Vision, 2017.

[22] H. Zhang, et al., “Densely connected pyramid dehazing network,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3194-3203, 2018. https://doi.org/10.1109/CVPR.2018.00337